Summary of How Facial Recognition Systems Work

This article discusses Identix®'s facial recognition software, FaceIt®, which identifies and analyzes facial features by measuring around 80 nodal points such as eye distance and jawline length. The software creates a unique numerical faceprint to compare against a database. Traditional 2D facial recognition methods struggled with variations in lighting and face orientation, leading to inaccuracies, especially in uncontrolled settings. New approaches are needed to improve effectiveness and reduce failure rates in real-world applications.

Components of the facial recognition system:

- FaceIt® software

- Database of stored face images

- Facial landmark/nodal point measurement algorithms

Facial Recognition Technology

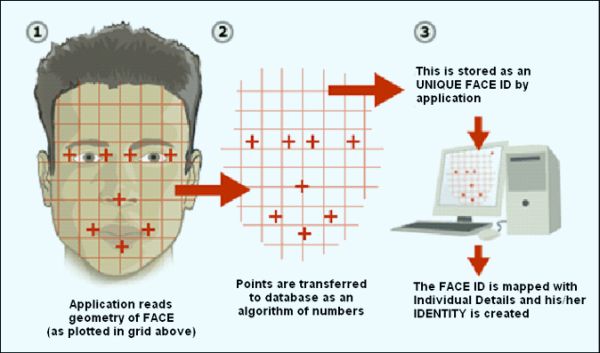

Identix®, a company based in Minnesota, is one of many developers of facial recognition technology. Its software, FaceIt®, can pick a face out of a crowd, extract it from the rest of the scene, and compare it to a database of stored images. For this to work, the software must be able to distinguish a face from the background. Facial recognition software depends on first detecting a face and then measuring its features.

Every face has numerous distinguishable landmarks: the peaks and valleys that make up facial features. FaceIt defines these landmarks as nodal points, and each human face has approximately 80 of them. Those measured by the software include:

- Distance between the eyes

- Width of the nose

- Depth of the eye sockets

- The shape of the cheekbones

- The length of the jaw line

Measuring these nodal points produces a numerical code, called a faceprint, that represents the face in the database.
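To make the idea of a faceprint concrete, here is a minimal sketch in Python. It is not FaceIt's actual algorithm, which the article does not describe. It assumes a faceprint is simply an ordered list of numeric measurements and that two faceprints are compared by Euclidean distance. The feature names, the values, and the functions `make_faceprint`, `distance`, and `closest_match` are all hypothetical, and only five measurements are used instead of roughly 80.

```python
import math

# Hypothetical feature names; a real system would use far more nodal points.
FEATURES = ("eye_distance", "nose_width", "eye_socket_depth",
            "cheekbone_shape", "jaw_length")

def make_faceprint(measurements):
    """Turn a dict of measurements into a fixed-order numeric tuple."""
    return tuple(float(measurements[name]) for name in FEATURES)

def distance(a, b):
    """Euclidean distance between two faceprints of equal length (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def closest_match(probe, database):
    """Return (name, distance) for the stored faceprint nearest to the probe."""
    return min(((name, distance(probe, fp)) for name, fp in database.items()),
               key=lambda pair: pair[1])

# Made-up stored faceprints in arbitrary units.
database = {
    "alice": make_faceprint({"eye_distance": 6.2, "nose_width": 3.1,
                             "eye_socket_depth": 2.4, "cheekbone_shape": 0.8,
                             "jaw_length": 11.5}),
    "bob": make_faceprint({"eye_distance": 5.8, "nose_width": 3.6,
                           "eye_socket_depth": 2.1, "cheekbone_shape": 0.6,
                           "jaw_length": 12.3}),
}

# A newly captured face, measured the same way.
probe = make_faceprint({"eye_distance": 6.1, "nose_width": 3.2,
                        "eye_socket_depth": 2.4, "cheekbone_shape": 0.8,
                        "jaw_length": 11.6})

name, dist = closest_match(probe, database)
print(name, round(dist, 3))
```

Keeping the measurements in a fixed order is what lets two faceprints be compared element by element. Any other distance measure could be substituted in `distance` without changing the rest of the sketch.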

In the past, facial recognition software relied on comparing a 2D image against other 2D images in the database. To be effective and accurate, the captured image needed to show a face looking almost directly at the camera, with little variation in lighting or facial expression from the image in the database. This requirement was a serious limitation.

In most instances, the images were not taken in a controlled environment. Even small changes in lighting or orientation could reduce the system’s effectiveness to the point where a captured face could not be matched to any face in the database, leading to a high rate of failure. In the next section, we will look at ways to correct the problem.
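The failure mode described above can be illustrated with a small Python sketch. It assumes, purely for illustration, that a match is accepted only when the distance between faceprints falls under a fixed threshold. The threshold, the numbers, and the function `match_or_none` are hypothetical and are not taken from FaceIt or any real system.

```python
import math

def distance(a, b):
    """Euclidean distance between two faceprints of equal length (assumed metric)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def match_or_none(probe, database, threshold):
    """Return the nearest stored name, or None if nothing is within the threshold."""
    name, best = min(((n, distance(probe, fp)) for n, fp in database.items()),
                     key=lambda pair: pair[1])
    return name if best <= threshold else None

# Made-up faceprint stored from a controlled, frontal photo.
database = {"alice": (6.2, 3.1, 2.4, 0.8, 11.5)}

# Same person, near-frontal pose and similar lighting: measurements barely move.
frontal = (6.1, 3.2, 2.4, 0.8, 11.6)

# Same person with the head turned and different lighting: the 2D
# measurements shift even though the face itself has not changed.
turned = (5.4, 2.6, 2.9, 0.5, 10.7)

THRESHOLD = 0.5  # hypothetical acceptance threshold

print(match_or_none(frontal, database, THRESHOLD))
print(match_or_none(turned, database, THRESHOLD))
```

With these illustrative numbers, the frontal capture falls within the threshold while the turned capture does not, so the same person goes unrecognized. Raising the threshold would accept the turned capture but would also make false matches with other faces more likely.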

For more detail: How Facial Recognition Systems Work