Introduction

In our final project, we created a smart voice decoder system that is capable of recognizing vowels in human speech. The audio input is sampled through a microphone/amplifier circuit and analyzed in real time using the Mega644 MCU. The user can record and analyze his or her speech using both hardware buttons and custom commands through PuTTY. The final product also supports a simple voice password system in which the user sets a sequence of vowels as a password to protect a message via PuTTY. The message can then be decoded by repeating the same sequence into the microphone.

Some of the topics explored in this project are the Fast Walsh Transform, sampling theorems, and human speech analysis.

High Level Design

| LED | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|---|
| Freq (Hz) | 0-170 | 170-310 | 310-420 | 420-560 | 560-680 | 680-820 | 820-930 | 930-10000 |

Then they would turn on the LED that corresponded to the most energetic frequency division in the input frequency spectrum. This made us wonder whether speech could be identified by a similar method.
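The band-to-LED mapping in the table can be sketched as a simple lookup. This is an illustrative sketch rather than the original code: the function name `led_for_frequency` is hypothetical, the band edges are copied from the table and assumed to be in Hz, and treating each lower edge as inclusive is an assumption.

```python
from bisect import bisect_right

# Upper edges of LED bands 0..6, copied from the table (assumed to be Hz).
# LED 7 covers everything from 930 up to MAX_FREQ.
BAND_EDGES = [170, 310, 420, 560, 680, 820, 930]
MAX_FREQ = 10000


def led_for_frequency(freq):
    """Return the LED index (0-7) whose band contains freq, or None if out of range.

    Assumption: a frequency exactly on an edge belongs to the higher band.
    """
    if freq < 0 or freq > MAX_FREQ:
        return None
    # Count how many band edges are <= freq; that count is the LED index.
    return bisect_right(BAND_EDGES, freq)
```

For example, `led_for_frequency(500)` falls in the 420-560 band and selects LED 3.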

In fact, with today’s technology, speech can be fully recognized and even fully synthesized. However, most speech recognition software requires extensive computation and is very expensive. With the limited computational power of the Mega644 and a $75 project budget, we wanted to build a simple, smart voice recognition system capable of recognizing simple vowels.

After careful research and several discussions with Bruce, we found that vowels can be characterized by 3 distinct peaks in their frequency spectrum. This means that if we apply a transform to the input speech signal, the resulting frequency spectrum will contain characteristic peaks that correspond to the most energetic frequency components. If we then check whether the 3 peaks in the input fall within the ranges we defined for a specific vowel, we can deduce whether that vowel was present in the user’s speech.
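The three-peak check described above might look roughly like the sketch below. It is not the project's firmware: the names `top_peaks`, `match_vowel`, and `VOWEL_RANGES` are hypothetical, the frequency windows are placeholder values chosen only for illustration, and mapping bin `i` to `i * bin_width_hz` is an assumption.

```python
# Placeholder frequency windows in Hz, one (low, high) pair per peak.
# These are illustrative values only, NOT the ranges used in the project.
VOWEL_RANGES = {
    "EE": [(200, 400), (2000, 2600), (2700, 3500)],
    "AH": [(600, 900), (1000, 1400), (2300, 2700)],
}


def top_peaks(spectrum, bin_width_hz, count=3):
    """Return the frequencies of the `count` strongest local maxima, ascending.

    The first and last bins are never reported because they have only one neighbor.
    """
    peaks = []
    for i in range(1, len(spectrum) - 1):
        if spectrum[i] > spectrum[i - 1] and spectrum[i] >= spectrum[i + 1]:
            peaks.append((spectrum[i], i * bin_width_hz))
    peaks.sort(reverse=True)  # strongest first
    return sorted(freq for _, freq in peaks[:count])


def match_vowel(peak_freqs, ranges=VOWEL_RANGES):
    """Return the first vowel whose windows contain every peak, else None."""
    for vowel, windows in ranges.items():
        if len(peak_freqs) == len(windows) and all(
            lo <= f <= hi for f, (lo, hi) in zip(peak_freqs, windows)
        ):
            return vowel
    return None
```

A spectrum with fewer than three local maxima returns `None`, which is one simple way to treat an input as "no vowel detected".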

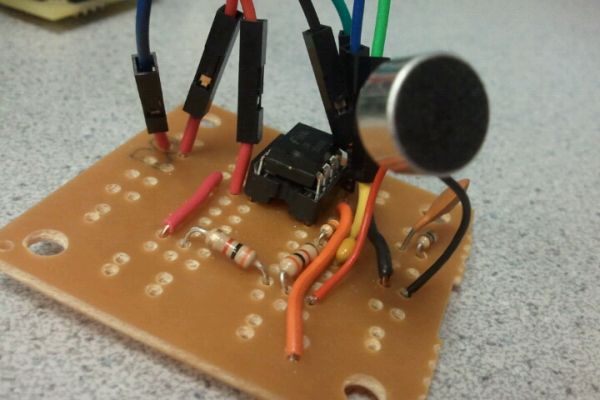

The main structure of our decoder system centers on the Mega644 MCU. Our program allows the MCU to coordinate commands placed by the user via PuTTY and the button panel while analyzing the user’s audio input in real time. On the lowest design level (hardware), we have a microphone and a button panel that convert the user’s physical inputs into analog and digital signals the MCU can react to. On the highest level, PuTTY displays the operating status of the MCU and passes the user’s command-line input to the MCU. PuTTY also gives the user the freedom to test the accuracy of our recognition and simulates a security system in which the user must say a specific sequence of vowels to see a secret message.

Basically, the first three formant frequencies (the peaks in the harmonic spectrum of a complex sound) account for the distinct character of different vowel sounds.

Therefore, if we can pick out the formants by their intensities in different frequency ranges, we can identify a vowel sound and use a sequence of vowels to generate an audio passcode specific to that sequence.
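One way to turn per-frame vowel decisions into a passcode check is sketched below. The source does not describe how the firmware compares sequences, so everything here is an assumption: the function names are hypothetical, `None` is assumed to mark a frame with no recognized vowel, and a vowel held across several consecutive frames is assumed to count once.

```python
def collapse_frames(frames):
    """Reduce per-frame detections to the spoken vowel sequence.

    Consecutive identical detections are merged into one entry. A None frame
    (assumed to mean silence or no match) is dropped, but it separates
    repeats, so "A", None, "A" counts as two vowels.
    """
    sequence = []
    previous = None
    for vowel in frames:
        if vowel is not None and vowel != previous:
            sequence.append(vowel)
        previous = vowel
    return sequence


def passcode_matches(frames, stored):
    """Return True if the collapsed detections equal the stored passcode."""
    return collapse_frames(frames) == list(stored)
```

With this scheme, the detections `["A", "A", None, "E", "E", "O"]` match the stored passcode `["A", "E", "O"]`.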

The Fast Walsh Transform decomposes the input waveform into orthogonal square waves of different frequencies. Since we are only working with voice ranges here, we set the sampling frequency to 7.8 kHz, which allows us to detect (ideally) up to 3.8 kHz. We also knew that the lowest fundamental frequency of the human voice is about 80-125 Hz. Thus, we chose a frame size of 64 samples. This generates 32 frequency elements equally spaced from 0 Hz to 3.8 kHz (not including the DC component). The individual frequency division width is 3.8k/32 = 118.75 Hz, which maximizes our frequency division usage: every division can carry useful information, whereas with a division width of, say, 50 Hz, the first division would not provide useful information. Furthermore, this choice also minimizes our computation time, since the more samples we have to compute, the more time the MCU takes to process the input audio data.
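As a rough illustration of the transform and the bin arithmetic above, here is a minimal sketch of an in-place fast Walsh-Hadamard transform followed by a reordering into sequency order (number of sign changes). It is not the project's MCU code: the function names are hypothetical, the output is unnormalized, and the Gray-code plus bit-reversal permutation is one standard way to get sequency order. How the 64 coefficients are folded into the 32 divisions is not specified here and is not shown.

```python
SAMPLE_COUNT = 64                          # frame size from the text
BIN_WIDTH_HZ = 3800.0 / 32                 # 3.8k/32 from the text


def fwht(samples):
    """Unnormalized fast Walsh-Hadamard transform (natural/Hadamard order)."""
    a = list(samples)
    n = len(a)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a power of two")
    h = 1
    while h < n:
        # Butterfly pass: combine pairs that are h apart.
        for start in range(0, n, 2 * h):
            for j in range(start, start + h):
                u, v = a[j], a[j + h]
                a[j], a[j + h] = u + v, u - v
        h *= 2
    return a


def sequency_order(coeffs):
    """Reorder Hadamard-order coefficients so index s has s sign changes."""
    n = len(coeffs)
    bits = n.bit_length() - 1
    out = []
    for s in range(n):
        gray = s ^ (s >> 1)
        # Bit-reverse the Gray code to find the matching Hadamard row.
        idx = int(format(gray, f"0{bits}b")[::-1], 2) if bits else 0
        out.append(coeffs[idx])
    return out
```

For instance, the 8-sample square wave `[1, 1, 1, 1, -1, -1, -1, -1]` has one sign change, so all of its energy lands in sequency index 1 of `sequency_order(fwht(...))`.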

In this part, most of our research was based on the common vowels ‘A’, ‘E’, ‘I’, ‘O’, and ‘U’, which suggested that the method we set out to develop was achievable. In practice, however, we found that the differences among these five vowels are not obvious enough to be distinguished by a simple comparison of their sequency spectra.

We first used Adobe Audition to observe the initial input waveforms taken directly from the microphone and from AT&T text2speech, as shown in the picture. Although waveforms corresponding to the same vowel have a similar shape, differences remain, and these may be easier to see in the frequency domain.

The first MATLAB program is based on Prof. Land’s code, which computes the FFT and FWT outputs as spectrograms, then takes the maximum of each time-sliced transform and compares the results. The top row is the FFT power spectrum and the bottom row is the FWT sequency spectrum. The maximum-intensity coefficients of each spectrogram time slice have almost the same shape for the FFT and the FWT. We’ll take one spectrum as an example.
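The "maximum of each time slice" step can be sketched in a few lines. This is not Prof. Land's MATLAB code: it is a pure-Python illustration with hypothetical function names, it uses a naive O(N²) DFT in place of an FFT, it covers only the Fourier side of the comparison, and the 64-sample frame size is an assumption.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Magnitudes of DFT bins 0..N/2 of one frame (naive DFT, not an FFT)."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(frame))
        mags.append(abs(acc))
    return mags


def peak_bin_per_frame(signal, frame_size=64):
    """Return, for each non-overlapping frame, the index of its strongest bin.

    Bin 0 (DC) is skipped; trailing samples that do not fill a frame are ignored.
    """
    peaks = []
    for start in range(0, len(signal) - frame_size + 1, frame_size):
        mags = dft_magnitudes(signal[start:start + frame_size])
        peaks.append(max(range(1, len(mags)), key=mags.__getitem__))
    return peaks
```

Plotting the returned indices against the frame number gives a one-line trace of where the dominant spectral energy sits over time, which is the kind of curve the comparison above looks at.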

Another program directly implements the FFT and shows a frequency series. In this figure we can clearly see the resonance peaks of a vowel. This transform uses 256 points. Also, notice that because of noise interference, it is hard to pick out the second peak for [EE], and this is not the only such case.

Parts List:

| Parts Name | Quantity | Source | Cost/each | Cost |
|---|---|---|---|---|
| Mega644 | 1 | ECE 4760 Lab | $8 | $8 |
| Solder Board | 2 | ECE 4760 Lab | $1 | $2 |
| RS232 Connector | 1 | ECE 4760 Lab | $1 | $1 |
| MAX233 | 1 | ECE 4760 Lab | $7 | $7 |
| Microphone | 1 | ECE 4760 Lab | Free | Free |
| LM358 Amplifier | 1 | ECE 4760 Lab | Free | Free |
| Push Button | 3 | ECE 4760 Lab | Free | Free |
| LED | 3 | ECE 4760 Lab | Free | Free |
| Jumper Cables | 10 | ECE 4760 Lab | $1 | $10 |
| Header Pins | 14 | ECE 4760 Lab | $0.05 | $0.7 |
| Resistors | Several | ECE 4760 Lab | Free | Free |
| Capacitors | Several | ECE 4760 Lab | Free | Free |
| Power Supply | 1 | ECE 4760 Lab | $5 | $5 |
| Custom PCB | 1 | ECE 4760 Lab | $4 | $4 |
| Dip Sockets | 2 | ECE 4760 Lab | $0.5 | $1 |
| Total Cost | | | | $38.7 |
