Introduction:

Error correcting codes are used throughout digital communication systems today. Cell-phones, CD players, satellites, digital pagers and many other communication devices all use varying amounts of error control to achieve a certain degree of accuracy in transmitting information. The idea behind error control codes is very simple; one can insert some amount of controlled redundancy into an information sequence before transmitting it through an analog channel where it will be exposed to noise while on its way to a destination (the receiving end). The extra redundancy will help the receiver (to some degree) detect and possibly correct errors that occurred during transmission.

In our project we focused on implementing a (31, 16) triple error correcting binary BCH code. A BCH code is a type of block code; this means that information is split into blocks of a specified length and each block is separately encoded and decoded independently of the other blocks. In our code the number 31 represents the length of the code which is expressed in bits, and the number 16 represents the number of information bits per block. Thus our (31, 16) code has a redundancy of 31 16 = 15 bits per 31 bit block, which is a little under 50%. In general, the more redundant a code is, the smaller the data rate of the code is, that is the more total data one has to send per information bit.

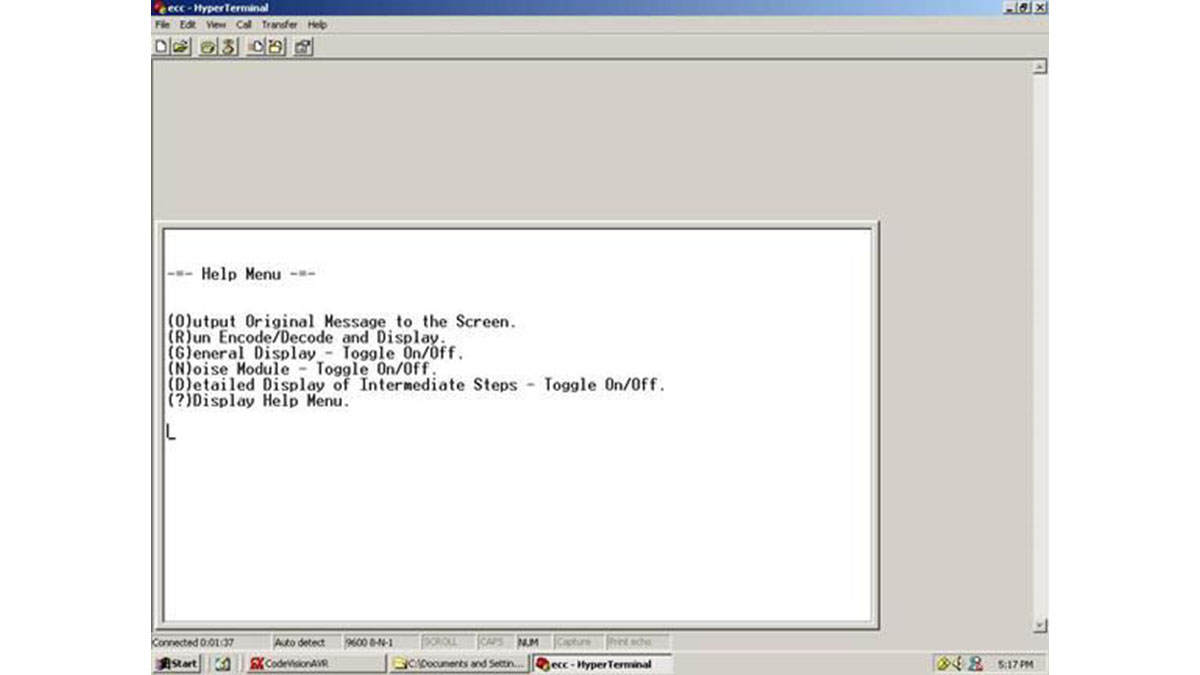

In this project we designed and implemented our (31, 16) BCH code to run on the Atmel Mega32 microcontroller. We interfaced with the Hyperterm program on the computer in order to test our code on real data. When starting the project, we found that it was easier to first program and test our code at home on a C compiler and then later modify the program to work under the Cvavr embedded environment in lab.

High Level Design:

We were inspired to do a project on error control codes when thinking of the countless of times we handled, played with, and passed around burned CDs throughout our college careers. Making our own CD player seemed out of reach so we decided to design and implement an error correcting code that we could somehow run and test on the microcontroller. As a reach, we set the goal of interfacing whatever code we would choose with a wireless (802.11b) network card so that we could simulate a true wireless channel by sending data from the mcu to a nearby laptop. We were not able to reach this goal, since it took us most of the project allotted time just to program and test our code with Hyperterm using a software noise module.

Initially, we had to decide on the type of code that we could implement and turn into a full project. We knew that Hamming codes were very simple to do (besides they could only correct one error per block) and we also looked at some convolutional codes (which seemed to be too computationally intensive for the amount of error correction they provided). In the end we decided to try to implement a binary BCH code which we knew could correct a certain number of errors per block depending on the length and dimension of the code that we chose. Implementing a binary BCH code also seemed interesting because we knew their structure was closely related to the q-ary family of Reed-Solomon codes which are used in todays CD players.

Before delving into the details of our specific BCH code, it is necessary to talk a little bit about the mathematics behind BCH codes.

Error Correcting Codes and Finite Field Arithmetic: A Short Detour

The simplest possible error correcting code (and probably the worst in terms of redundancy) is the binary repetition code. For example in the length 3 binary repetition code, a 0 is mapped to the 000 code word and a 1 is mapped to the 111 code word. This code is highly redundant; it has a data rate of 1/3 meaning that only 1 in 3 bits is needed to perfectly reconstruct the message at the receiver if no errors in the channel occur. At the receiving end, decoding is performed by the majority rule, i.e. if two out of three bits are 0s, the group of three bits get decoded to a zero. Using this code and assuming that the errors introduced by the channel are additive, one can easily see that this code can correct at most 1 error.

That is if more than 1 error occurs in the code word, the decoder will incorrectly map the received data. For example if the original encoded message is 000111 and the channel flips the first two bits and the last one, the received data will be 110110. Hence if the receiver decodes by the majority rule, it will map the first three bits 110 to 111 (the wrong code word) and will also map the last three bits 110 to 111. In the first group the decoder made a mistake and mapped to the wrong code word because the number of errors that the channel added was 2, while in the last group of three bits the decoder again mapped 110 to 111 which happened to be correct since only one error occurred in the 3 bits.

We can measure the Hamming distance between two code words as the number of coordinates in which the two code words differ. In the example above, the Hamming distance of the 111 and 000 code words is 3 since they differ in all three coordinates. The minimum distance of a block code is the minimum Hamming distance between all distinct pairs of code words in the code. The example above suggests that the farther apart (in minimum Hamming distance) code words are, the more errors a code can correct. In general one notices that the length n binary repetition code has a minimum Hamming distance of and can correct all error patterns of weight, where the weight of a code word or error pattern is defined as the number of nonzero coordinates in the code word or error pattern.

BCH codes are a special type of linear cyclic block codes. A code is linear if and only if the set of code words forms a vector subspace over a finite field. For example, a binary linear code forms a vector subspace over GF(2), a field of 2 elements. The structure GF(2) is an example of a Galois or finite field. A Galois field is a mathematical structure that contains a finite number of elements upon which addition and multiplication is defined. A field F forms a commutative group under addition, with the additive identity element labeled as 0. The field F without the 0 element also forms a commutative group under multiplication, this time the multiplicative identity element is labeled 1. A field F also satisfies the distributive property; that is a*(b + c) = a*b + a*c. Some important facts about Galois fields are as follows [1]:

- Every element in a Galois field has a unique inverse (this follows from the fact that a field F forms a commutative group under addition and multiplication).

- The size of the Galois field (i.e. the number of elements in the field) uniquely determines the field. The size of the Galois Field GF() for example, is elements.

- Galois fields only exist for GF() where , where is a prime integer and is a positive integer.

- Every finite field contains a primitive element; that is an element that when multiplied by itself produces all of the other elements in the field. This primitive element is sometimes called the generator of the field. Because the result of a field operation on elements in a field must also be a field element, it follows that in a field GF() the primitive element will cycle back to itself only after multiplications.

- The order of an element , is the smallest positive integersuch that = 1. If is a primitive element, then from point 4 above it follows that the order of is .

- The field GF() where is prime, is formed from the integers {0, 1, 2,., -1) under modulo addition and multiplication. For example, the binary field GF(2) is formed from integers in the set {0, 1}. Addition modulo p is as follows: 0+0 = 0, 0 + 1 = 1 + 0 = 1, b0ut 1 + 1 = 0 (mod p). Multiplication is as usual.

A linear block code satisfies the property that the linear combination of any subset of code words is also a code word (this makes sense since the set of code words forms a vector subspace, implying closure under field operations). Linear codes have a specified block length and dimension; for example an (n, k) linear code over GF(2) has length n and dimension k. The length of a code is just the number of coordinates in each code word of the code. The dimension k of the code is the dimension of the vector space of the linear code. Thus the number valid code words of a (n, k) linear code over GF(2) is.

Another way of seeing this is to think of a message of length k being encoded into a code word of length n (n > k ensures that redundancy is added to the message). If the message is in binary (i.e. over the field GF(2)), and each message produces a unique code word, we can see that the total number of valid code words is exactly, corresponding to possible messages. The code words of the code form a subspace over the vector space of all n-vectors (that is over all possible n-tuples).

For more detail: Implementation of a (31, 16) BCH code on a Microcontroller