We have created a device that interprets the NTSC video signal from the video game Rock Band and outputs audio signals via a pair of speakers to simulate a human singer playing the vocalist part.

We chose this project because we wanted to do something related to music, and we were inspired by the automated Rock Band guitarist from last year's ECE 4760 projects. Rock Band guitarists are often told that they should learn to play a real guitar, but playing the vocal track on Rock Band requires actually being able to sing. We thought that emulating a human singer based solely on reading a few lines on the screen would be pretty cool, so we decided to pursue it.

High Level Design

Ever since the release of Guitar Hero and online statistics comparing players by score, people have been trying to gain an advantage by building automated players that consistently achieve perfect scores. Vocals were not introduced as an instrument until Rock Band, and people were much less inclined to automate singing, since anyone listening could hear the automated singer and would know you were cheating. However, just because an automated singer can't be used to improve your online statistics doesn't make it any less interesting. Ultimately, the goal is to automate the functional equivalent of a person humming along to a song, and anything that copies what we can do inherently as humans is cool.

The basic premise is simple: sing a note (in the form of a sine wave of a specific frequency), determine how far off we are from singing the correct note, adjust the frequency of what we are singing, and repeat. Since Rock Band only requires that the note’s pitch be correct, we can sing using sine waves rather than words! In order to accomplish this, we need to analyze the video signal to determine where we are singing (indicated by the white “puck” on the left side of the screen) and where we should be singing (looking ahead at the upcoming notes). Depending on the magnitude and sign of this positional difference, we can adjust our output signal’s frequency accordingly, and repeat this to sing.
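One pass through this sing/measure/adjust loop can be sketched as a proportional correction. This is a minimal sketch, assuming the puck and the upcoming note have already been measured as video line numbers and that higher pitches are drawn higher on the screen. The function name `adjust_frequency`, the gain `HZ_PER_LINE` and the frequency limits are hypothetical, not values from our design.

```c
/* Hypothetical tuning constants, not values from the original project. */
#define HZ_PER_LINE  4.0f     /* frequency change per line of vertical error */
#define MIN_FREQ_HZ  80.0f    /* lowest pitch we allow ourselves to sing */
#define MAX_FREQ_HZ  1000.0f  /* highest pitch we allow ourselves to sing */

/* One step of the feedback loop.
 * puck_line:   video line where the puck was found (the pitch we are singing)
 * target_line: video line of the upcoming note (the pitch we should sing)
 * Lines are numbered top to bottom, so a target above the puck (smaller line
 * number) means we are singing too low and must raise the frequency
 * (assuming higher pitches are drawn higher on the screen). */
float adjust_frequency(float freq_hz, int puck_line, int target_line)
{
    int error = puck_line - target_line;   /* > 0: we are too low */

    freq_hz += HZ_PER_LINE * (float)error; /* proportional correction */

    /* Keep the output within a singable range. */
    if (freq_hz < MIN_FREQ_HZ) freq_hz = MIN_FREQ_HZ;
    if (freq_hz > MAX_FREQ_HZ) freq_hz = MAX_FREQ_HZ;
    return freq_hz;
}
```

For example, starting at 220 Hz with the puck 10 lines below the note, one step raises the output to 260 Hz. A purely proportional step is the simplest choice; if the on-screen pitch axis is closer to semitone spacing, scaling the frequency by a ratio per line of error would track more evenly across the range.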

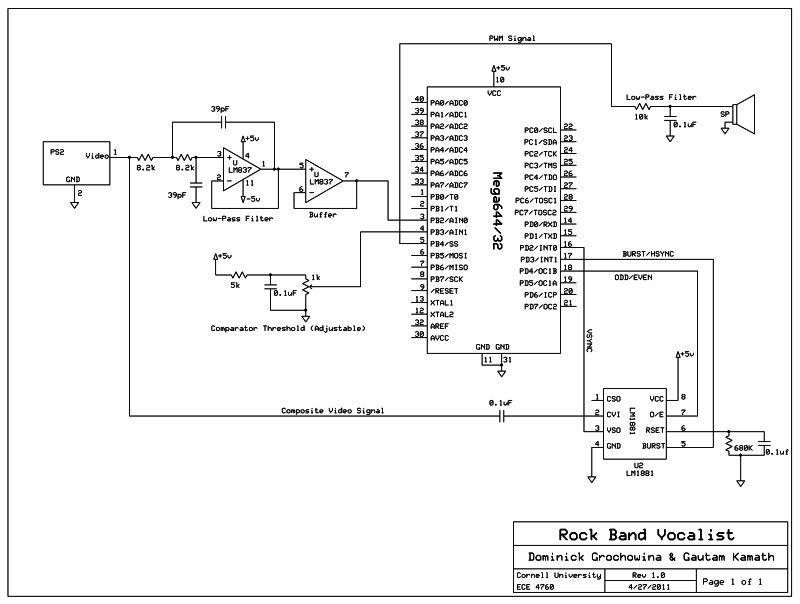

We split the composite video signal from the PlayStation 2 and send it both to the TV (so we can watch as we play) and to our circuit. The video signal is filtered to remove color and passed into the MCU's analog comparator, where it is compared against a threshold so we can tell when the line being read has a note present (a note on the black background appears bright and exceeds the comparator threshold). The video signal is also sent into a sync separator chip that generates the reference signals we need to determine which line and frame we are reading at any given time.
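The threshold test yields one bright/dark bit per line at whatever horizontal position the MCU samples. A hypothetical sketch of turning those bits into a vertical position: scan a window of lines and return the middle of the first bright run, since a note or the puck is several lines tall. The per-line array and the function name are assumptions made so the logic can run on a PC; on the ATmega644 each bit would be read from the ACO bit of the ACSR register at a fixed delay after the start of the line.

```c
#include <stdbool.h>

/* Hypothetical helper: bright[i] is the comparator result (true = above
 * threshold) sampled at one fixed horizontal position on video line i.
 * Scans lines first..last and returns the middle line of the first bright
 * run, or -1 if no line in the window was bright. */
int bright_run_center(const bool *bright, int first, int last)
{
    int start = -1;                          /* top edge of the current run */

    for (int line = first; line <= last; line++) {
        if (bright[line] && start < 0) {
            start = line;                    /* run begins on this line */
        } else if (!bright[line] && start >= 0) {
            return (start + line - 1) / 2;   /* run ended on line - 1 */
        }
    }
    if (start >= 0) {
        return (start + last) / 2;           /* run reached the window's end */
    }
    return -1;                               /* nothing crossed the threshold */
}
```

Sampling once at the puck's horizontal position and once slightly ahead of it would give the two vertical positions whose difference drives the frequency adjustment.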

In order to analyze the video signal, we need to interpret the NTSC color standard in which the video is encoded. NTSC video consists of about 60 fields per second, interlaced into about 30 full frames per second (29.97 exactly). Each frame contains 525 lines (486 of which are visible, with the remaining 39 used for synchronization/retrace), split between two fields of 262.5 lines each. This gives a line rate of (30 frames/second) * (525 lines/frame) = 15750 lines/second, or about 63.5 us/line. Using a 20MHz clock, for a cycle time of 50ns, we have roughly 1270 cycles per line for computation. Thus, this project is certainly achievable using the ATmega644.
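The line timing is easy to get wrong by a factor of two: each of the roughly 60 fields per second carries only 262.5 lines, so the line rate is 60 * 262.5 = 30 * 525 = 15750 lines/s. The helpers below simply encode that arithmetic with nominal values (30 frames/s; the exact 29.97 frames/s gives about 15734 lines/s). The macro and function names are illustrative only.

```c
/* Nominal NTSC and CPU timing values. */
#define FRAMES_PER_SEC   30.0    /* 29.97 exactly; 30 is close enough here */
#define LINES_PER_FRAME  525.0   /* two interlaced fields of 262.5 lines */
#define CPU_HZ           20.0e6  /* 20 MHz clock, i.e. 50 ns per cycle */

/* Horizontal line rate in lines per second. */
double line_rate_hz(void)
{
    return FRAMES_PER_SEC * LINES_PER_FRAME;
}

/* Duration of one video line in microseconds. */
double line_period_us(void)
{
    return 1.0e6 / line_rate_hz();
}

/* CPU cycles available per video line. */
double cycles_per_line(void)
{
    return CPU_HZ / line_rate_hz();
}
```

These evaluate to 15750 lines/s, about 63.5 us per line and about 1270 cycles per line.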

Hardware

Since we are playing Rock Band on a Sony PlayStation 2, we used its standard composite video output, which carries NTSC video. To analyze the video signal correctly, we need to extract the sync signals (VSYNC and BURST) to keep track of when a new field and a new line begin, respectively, as well as an odd/even bit that tells us which field, and therefore which specific line, we are reading, since the video signal is interlaced.
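The odd/even bit matters because each field carries only every other line of the picture. A hypothetical mapping from a line index within one field to a row of the full frame is sketched below, assuming the odd field supplies rows 0, 2, 4, ... and the even field rows 1, 3, 5, ...; which field actually lands on top should be checked against the real odd/even signal before relying on it.

```c
#include <stdbool.h>

/* Map a visible line index within one field to its row in the full
 * interlaced frame. Assumes (hypothetically) that the odd field holds the
 * even-numbered frame rows and the even field holds the odd-numbered rows. */
int frame_row(int field_line, bool odd_field)
{
    return 2 * field_line + (odd_field ? 0 : 1);
}
```

Without the odd/even bit, line 100 of one field and line 100 of the next would look identical even though they are adjacent rows (200 and 201) of the frame.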

Video Signal

The first step is to filter the video signal so that it is easier to analyze before passing it into the MCU. The NTSC video signal, which spans roughly 5.5 MHz of bandwidth, is encoded using luminance and chrominance (brightness and color, respectively). The luminance occupies the entire channel, while the chrominance is modulated onto a color subcarrier at about 3.58 MHz. Since we are interested solely in the vocal track (which appears as a green track on the black background), we do not need to distinguish between colors; we only need to detect whether the vocal track is present at a certain position on the screen. This can be done with just the luminance signal, which is essentially a black-and-white signal once the chrominance has been filtered out.

This simplifies things greatly, as the luminance signal can be extracted by simply passing the video signal through a low-pass filter with a cutoff around 2MHz. Some high-frequency detail is lost, but we do not need it for our task. Extracting the chrominance would also be very difficult: it is encoded using QAM (quadrature amplitude modulation), so the MCU would have to synchronize with the color subcarrier in order to demodulate it.

We opted for an active Sallen-Key low-pass filter because we wanted unity gain and the steeper two-pole roll-off (40 dB/decade) above the cutoff frequency. We needed an op-amp with a high enough unity-gain bandwidth to filter our signal. We found the LM837 in lab (low noise, 25MHz GBP) and decided to use it rather than order an expensive high-speed video op-amp that would work no better for this purpose. To keep power-supply ripple out of the filtered output, decoupling capacitors (0.1uF) are placed between +5V/-5V and ground (not shown).
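For reference, the standard design equations for a unity-gain Sallen-Key low-pass stage are below, with R1 from the input to the middle node, R2 from the middle node to the op-amp's non-inverting input, C1 from the middle node to the output (feedback), and C2 from the non-inverting input to ground. The component values in the worked example are hypothetical, chosen only to land near the 2MHz cutoff; they are not the values in our circuit.

$$
f_c = \frac{1}{2\pi\sqrt{R_1 R_2 C_1 C_2}}, \qquad
Q = \frac{\sqrt{R_1 R_2 C_1 C_2}}{C_2\,(R_1 + R_2)}
$$

With R1 = R2 = R this reduces to Q = (1/2)sqrt(C1/C2), so equal capacitors give Q = 0.5 and C1 = 2 C2 gives the Butterworth value Q = 1/sqrt(2), about 0.707. For example, with R = 1 kOhm and C1 = C2 = 82 pF:

$$
f_c = \frac{1}{2\pi\,(1\,\mathrm{k\Omega})(82\,\mathrm{pF})} \approx 1.94\,\mathrm{MHz}, \qquad Q = 0.5
$$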

Reference Signals

Now that we have a clean video signal feeding into the MCU, we need to analyze specific points on specific lines. To do this, we need to extract the sync reference signals (VSYNC, marking a new field, and BURST, marking a new line). We had initially intended to design this circuit ourselves (using a state machine and edge detection), but after looking at last year's Automated Rock Band Player project, we decided to use the same chip it used, the LM1881 Video Sync Separator. This chip takes the AC-coupled composite video signal and extracts the sync signals, as well as an odd/even signal, since the video signal is interlaced. These reference signals are sent to the MCU so we can determine which line we are reading at any point in the video signal.
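A minimal sketch of how the MCU might turn these pulses into a line number, assuming VSYNC resets a counter, each BURST pulse advances it, and the odd/even level is latched at each VSYNC. The struct and handler names are hypothetical; on the ATmega644 these functions would be called from external or pin-change interrupt handlers, which are not shown, so here they are plain functions that can be exercised on a PC.

```c
#include <stdbool.h>

/* Hypothetical per-field line counter driven by the sync separator. */
typedef struct {
    int  line;       /* BURST pulses seen since the last VSYNC */
    bool odd_field;  /* level of the odd/even signal at the last VSYNC */
} line_counter;

/* Called on each vertical sync pulse: a new field begins. */
void on_vsync(line_counter *lc, bool odd_even_level)
{
    lc->line = 0;
    lc->odd_field = odd_even_level;
}

/* Called on each burst pulse: a new line begins. */
void on_burst(line_counter *lc)
{
    lc->line++;
}
```

Because the count restarts every field, the first lines counted after VSYNC still fall in the vertical blanking interval, so sampling would only start once the count reaches the visible region of the screen.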

Parts List:

| Part | Source | Unit Price | Quantity | Total Price |
|---|---|---|---|---|
| STK500 | lab | $15.00 | 1 | $15.00 |
| Mega644 | lab | $8.00 | 1 | $8.00 |
| Color TV | lab | $10.00 | 1 | $10.00 |
| Speakers | lab | $0.00 | 1 | $0.00 |
| White board | lab | $6.00 | 2 | $12.00 |
| LM1881 Sync Stripper | DigiKey | $3.11 | 1 | $3.11 |
| RCA Splitter Female to Male | Amazon | $2.99 | 1 | $2.99 |
| 2 RCA Male/ 2 RCA Female Extension | Amazon | $7.53 | 1 | $7.53 |
| PS2 + accessories | previously owned | $0.00 | 1 | $0.00 |
| Rock Band Game | previously owned | $0.00 | 1 | $0.00 |
| LM837N | lab | $0.00 | 1 | $0.00 |
| 10K Potentiometer | lab | $0.00 | 1 | $0.00 |
| 1K Potentiometer | lab | $0.00 | 1 | $0.00 |
| External Power Supply (Proto-board PB503) | lab | $5.00 | 1 | $5.00 |
| 0.1 uF capacitor | lab | $0.00 | 3 | $0.00 |
| 0.47 uF capacitor | lab | $0.00 | 2 | $0.00 |
| 39 pF capacitor | lab | $0.00 | 2 | $0.00 |
| 10k Ohm Resistor | lab | $0.00 | 1 | $0.00 |
| 8.2k Ohm Resistor | lab | $0.00 | 2 | $0.00 |
| 5.1k Ohm Resistor | lab | $0.00 | 1 | $0.00 |
| 680k Ohm Resistor | lab | $0.00 | 1 | $0.00 |
| Wires + alligator clips | lab | $0.00 | several | $0.00 |
| Total | | | | $63.63 |
