Introduction

Objective

The goal of this project is to design a useful, fully functional real-world product that efficiently translates finger movements into letters of American Sign Language.

Background

American Sign Language (ASL) is a visual language based on hand gestures. It has been developed by the deaf community over the past centuries and is the third most used language in the United States today.

Summary

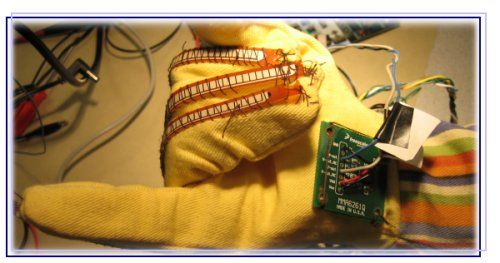

Our product, a sign language coach (SLC), has two modes of operation: Teach and Learn. The SLC uses a glove to recognize hand positions and outputs the corresponding ASL letter on an LCD. The glove detects the position of each finger by monitoring the bending of a flex sensor. Our motivation is two-fold: aside from helping deaf people communicate more easily, the SLC also helps people learn ASL. Below is a summary of what we did and why:

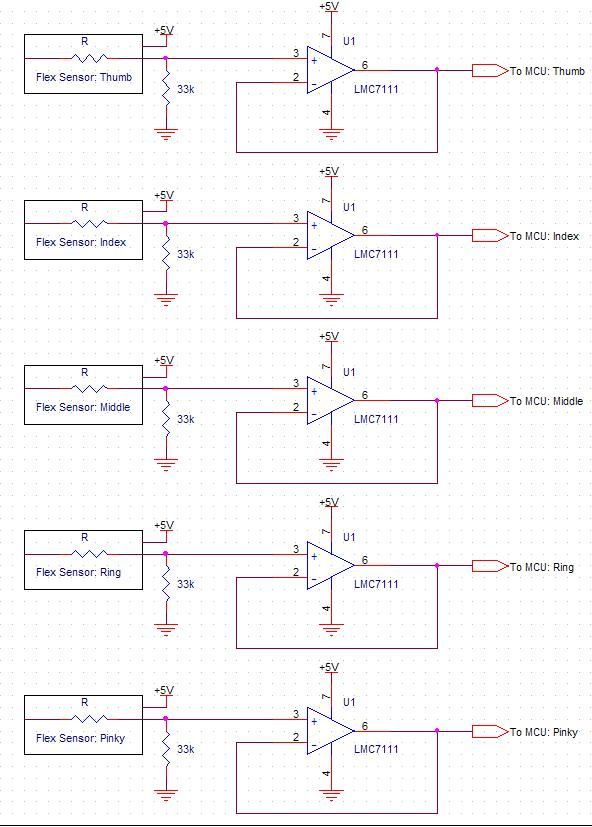

1) Build a flex sensor circuit for each finger. Sew the flex sensors and accelerometer onto the glove to more accurately detect the bending and movement of the components.

2) Send the sensor circuit outputs to the MCU A/D converter to parse the finger positions.

3) Implement Teach mode. In Teach mode, the user "teaches" the MCU ASL using hand gestures. To prevent data corruption, the A/D converter output and the associated user-specified letter are saved to EEPROM, which can only be reset by reprogramming the chip.

4) Implement Learn mode. In Learn mode, the MCU randomly chooses a letter it has been taught and teaches it to the user. The user "learns" by matching his hand position to the one the MCU has associated with the letter. Using the LCD, he can adjust his finger positions appropriately. The finger positions are matched to the appropriate ASL letter using an efficient matching algorithm.

High Level Design:

Rationale and Sources of Our Project Idea

There is currently an abundance of software on the market that helps teach people sign language. This software can be effective; however, it is hard to know whether you are making a sign correctly and the same way every time. Current products on the market are not very interactive. To check yourself, you have to look at a chart of hand positions on the computer screen and compare it with your own hand. We wanted to make a project that would help people practice and learn sign language without having to look at a screen every time to check the sign for precision.

For our project, we decided to move away from such software and use a glove to implement an interactive sign language teaching program. Our project is called the Sign Language Coach. We believe that, with the use of a glove, signs can be made with more accuracy and better consistency. Having a glove would also enable students to practice sign language without having to be next to a computer, making our project portable for anyone who wants to practice sign language.

The concept of a sign language glove began with a high school student who won the Intel Competition in 2001. His idea spurred so much interest that sign language gloves are still being researched and developed for the purpose of translation. The sign language glove seems to be a very useful tool to aid in communication with the deaf. A professor at George Washington University has received a grant from the government to research the capabilities of the glove. We believed that such a promising translator could also be an effective teacher for those who want to learn sign language.

Logical Structure

Our glove is similar to the other gloves that have been researched and developed. We have created a glove that learns different signs and saves these signs into the EEPROM of the microcontroller. Our implementation of the glove only deals with the 26 letters of the English alphabet that can be directly translated into American Sign Language (ASL). What sets our project apart from other gloves is that, after these letters are programmed into the microcontroller, letters are chosen at random for the student to practice and learn. The LCD is used as a reference for how much more or less each finger needs to bend to correctly sign a letter. The student must then adjust their hand position to match the prompted letter within a specified range before moving on to the next letter.

To use our product, the user must connect the Atmel Mega32 microcontroller to the computer and use HyperTerminal to program in the different hand positions of the alphabet. A black flip switch should be turned on to select TRAIN mode (the Teach mode described in the Summary). A yellow LED lights up to signify that the student is in the right mode. To input a position, a letter must be pressed on the keyboard followed by the ENTER key. Following that, the hand position for the letter must be held for approximately 10 seconds. The user is not expected to know ASL and can use a table of sign language letters for reference (thereby only having to use the computer once) or call in an American Sign Language expert to help perfect the letters of the alphabet.

After all the letters are programmed in, the black switch can be flipped and the yellow LED turns off, putting the microcontroller into PRACTICE mode (the Learn mode described in the Summary). At this point, the microcontroller can be disconnected from the computer, and the unit can be taken anywhere. The user can then start practicing positions by looking at the LCD as a reference. Using the LCD, the user can adjust his or her fingers to try to match the letter that appears on the screen. Once the position of the hand matches the letter on the screen, MATCH! appears on the LCD, followed by the next letter.

Hardware/Software Tradeoffs

There were many tradeoffs between hardware and software. One of the major tradeoffs is that software has little portability: a computer must be nearby in order to run it. We avoided the use of HyperTerminal in PRACTICE mode because of this lack of portability. On the other hand, software can greatly extend the capabilities of a sign language teacher. The software programs currently on the market can easily show animations of different words and use a variety of sounds to help teach the student. Because we relied mostly on hardware, implementing words and sound in our program proved to be much more difficult. With software, much more storage capacity is available to program in many different features. We were unable to implement sound because of the lack of memory on the Atmel Mega32 microcontroller.

Relevant Standards

There are very few standards related to our project. The one standard indirectly related to our project is the RS-232 serial standard, which we use to program our device with the different letters through HyperTerminal. The rest of our project is based on the flex sensors and accelerometer and their outputs to the analog-to-digital converter, which currently do not have any standards directly related to them.

Relevant Patents

Jameco holds a patent on the flex sensors, five of which we used in our project; however, this does not conflict with our project. We are simply using their product to implement our glove.

Program/Hardware Design:

Program Details

Tasks

This is an outline of the important tasks implemented in our code.

- task1()

Runs every 10ms

Determines mode: Program (Teach mode) or Train (Learn mode)

- Program

- User specifies a letter to be programmed

- Read in flex sensor circuit outputs

- Wait 3 seconds

- Collect 6 samples, discard the first, and average the remaining 5 samples

- Store average of samples into EEPROM for each letter

- Train

- Randomly generate a letter to be prompted to LCD

- Read in flex sensor circuit outputs for five fingers

- Read in accelerometer sensor output for movement

- Try to match hand position to programmed letters

- If found

- Display percentage differences to LCD

- Display match

- If not found

- Display percentages

- task2()

Runs every 1000ms or 1s

Used for transmitting between the MCU and HyperTerminal

- task3()

Runs every 10ms

Used for debouncing mode switch

- task4()

Runs every 1000ms or 1s

Used to delay the system by 1s
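The periodic tasks above can be illustrated with a countdown-based cooperative scheduler. This is a minimal host-side sketch, not our firmware: the names `sched_task`, `sched_tick`, `sched_dispatch`, and `sched_simulate` are hypothetical, and the one-millisecond tick is assumed to come from a timer interrupt on the MCU.

```c
#include <assert.h>
#include <stdint.h>

/* One periodic task: all names here are hypothetical, for illustration. */
typedef struct {
    uint16_t period_ms;  /* reload value, e.g. 10 for task1, 1000 for task2 */
    uint16_t remaining;  /* milliseconds until the task is next due */
    uint32_t runs;       /* how many times the task has been dispatched */
} sched_task;

/* Called once per millisecond (on the MCU, assumed to be a timer ISR). */
void sched_tick(sched_task *tasks, int n)
{
    for (int i = 0; i < n; i++) {
        if (tasks[i].remaining > 0) {
            tasks[i].remaining--;
        }
    }
}

/* Called from the main loop: dispatches every task whose countdown expired. */
void sched_dispatch(sched_task *tasks, int n)
{
    for (int i = 0; i < n; i++) {
        assert(tasks[i].period_ms > 0);
        if (tasks[i].remaining == 0) {
            tasks[i].remaining = tasks[i].period_ms;  /* re-arm the countdown */
            tasks[i].runs++;  /* a real build would call the task body here */
        }
    }
}

/* Simulates `ms` milliseconds of ticks and dispatches on a host machine. */
void sched_simulate(sched_task *tasks, int n, uint32_t ms)
{
    for (uint32_t t = 0; t < ms; t++) {
        sched_tick(tasks, n);
        sched_dispatch(tasks, n);
    }
}
```

Simulating one second with a 10 ms task and a 1000 ms task dispatches the first 100 times and the second once, which mirrors the relative rates listed in the outline.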

Important Functions

This is an outline of the important functions implemented in our code.

void gets_int(void)

- Receive

- Sets up ISR, which then does all the work of getting a command

void puts_int(void)

- Transmit

- Sets up ISR, which then sends one character

- ISR does all the work

void calc_percent(int my_letter, int pos[5])

- Calculates finger % differences between signed letter and prompted letter

- % for each finger = ((actual - current) / actual) * 100, where actual represents the values stored in EEPROM and current is the value being read from the glove

void plusminus()

- Displays +/- to LCD to indicate which way to flex each finger

- Negative (-) % means bend fingers to get correct positions

- Positive (+) % means straighten fingers
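The percentage and sign rules above can be sketched in plain C as follows. This is an illustration under assumptions rather than our actual `calc_percent` and `plusminus` code: the helper names `percent_diff` and `hint_char`, the integer arithmetic, the `tolerance` parameter, and the `'='` character for a finger that is close enough are all hypothetical.

```c
#include <assert.h>

#define NUM_FINGERS 5

/* Per-finger percent difference: ((stored - current) / stored) * 100.
 * `stored` stands for the values saved in EEPROM; integer math is assumed. */
void percent_diff(const int stored[NUM_FINGERS],
                  const int current[NUM_FINGERS],
                  int out[NUM_FINGERS])
{
    for (int i = 0; i < NUM_FINGERS; i++) {
        assert(stored[i] != 0);  /* avoid dividing by zero */
        out[i] = ((stored[i] - current[i]) * 100) / stored[i];
    }
}

/* Character to show for one finger:
 * '-' means bend the finger more, '+' means straighten it,
 * '=' (an assumption) means the finger is within the tolerance. */
char hint_char(int percent, int tolerance)
{
    if (percent < -tolerance) return '-';
    if (percent > tolerance) return '+';
    return '=';
}
```

For example, with a stored value of 100 and a current reading of 110, the difference is -10%, so with a tolerance of 5 the hint is `'-'` (bend more).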

int match(int pos[])

- Matches finger positions to appropriate letter in master alpha matrix

- Returns int of letter matched (a=0, z=25) or NOTFOUND (-1) if not found
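One way such a matcher could work is a linear scan that accepts the first taught letter whose five stored values all lie within a tolerance of the current reading. The sketch below assumes this approach; the function name `match_letter`, the `taught` flag array, and the absolute tolerance `tol` are hypothetical and are not taken from our code.

```c
#include <assert.h>
#include <stdlib.h>

#define NUM_FINGERS 5
#define NUM_LETTERS 26
#define NOTFOUND (-1)

/* Returns the index (a=0 ... z=25) of the first taught letter whose stored
 * finger values are all within `tol` of `pos`, or NOTFOUND if none match.
 * `taught[l]` is nonzero when letter l has been programmed (an assumption). */
int match_letter(int alpha[NUM_LETTERS][NUM_FINGERS],
                 const unsigned char taught[NUM_LETTERS],
                 const int pos[NUM_FINGERS],
                 int tol)
{
    assert(tol >= 0);
    for (int l = 0; l < NUM_LETTERS; l++) {
        if (!taught[l]) {
            continue;  /* skip letters that were never programmed */
        }
        int ok = 1;
        for (int f = 0; f < NUM_FINGERS; f++) {
            if (abs(alpha[l][f] - pos[f]) > tol) {
                ok = 0;  /* one finger out of range rejects this letter */
                break;
            }
        }
        if (ok) {
            return l;
        }
    }
    return NOTFOUND;
}
```

With only 26 letters and five values each, a linear scan costs at most 130 comparisons per call, so a more elaborate search structure is unlikely to be needed.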

void set_values(letter l, int arr[])

- Stores programmed finger positions into master alpha matrix

- Also records order of letters saved to EEPROM in index_set
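The bookkeeping described for `set_values` can be sketched as a small table that keeps the finger values per letter and the order in which letters were first taught. This is a hypothetical host-side version: the `letter_table` struct, the `table_set` name, and the choice to keep a re-taught letter at its original place in the order are assumptions, and EEPROM access is left out.

```c
#include <assert.h>

#define NUM_FINGERS 5
#define NUM_LETTERS 26

/* Hypothetical in-memory stand-in for the master alpha matrix and index_set. */
typedef struct {
    int alpha[NUM_LETTERS][NUM_FINGERS];  /* stored finger values per letter */
    int index_set[NUM_LETTERS];           /* letter indices in order first taught */
    int count;                            /* number of distinct letters taught */
} letter_table;

/* Stores (or overwrites) the finger values for `letter` ('a'..'z') and
 * returns the number of distinct letters taught so far. */
int table_set(letter_table *t, char letter, const int values[NUM_FINGERS])
{
    assert(letter >= 'a' && letter <= 'z');
    int idx = letter - 'a';

    /* Record the letter in the order list only the first time it is taught. */
    int seen = 0;
    for (int i = 0; i < t->count; i++) {
        if (t->index_set[i] == idx) {
            seen = 1;
        }
    }
    if (!seen) {
        t->index_set[t->count++] = idx;
    }

    for (int f = 0; f < NUM_FINGERS; f++) {
        t->alpha[idx][f] = values[f];
    }
    return t->count;
}
```

A list of taught letters like this makes it straightforward to pick a random prompt only from letters that have actually been programmed, for example by indexing `index_set` with a random number below `count`.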

Major Issues

Below are the main issues we encountered while programming the SLC and how we resolved them.

TEACH mode. Instead of hard-coding the five finger positions for each ASL letter, we implemented a Teach mode in which the MCU stores the user's finger positions and associates them with the user-specified ASL letter. The issue with this mode is that the user's finger positions are not perfectly still during the storing process. To resolve this issue, we allow the user 3 seconds of preparation time. In addition, we take six consecutive samples of the hand position, discard the first, and average the remaining five values for each finger to more accurately detect the sign.

printf("Set up your hand position...\n\r");

printf("You have 3 seconds to prepare your hand...\n\r");

task4(); //delay 1 second

task4(); //delay 1 second

task4(); //delay 1 second

printf("Begin calibrating...\n\r");

//sample ADC0-ADC4 six times, one second apart

for (j=0; j<6; j++) begin

for (i=0; i<5; i++) begin

ADMUX=0x60+i; //select channel i, AVcc reference, left-adjusted result

ADCSR.6=1; //start a conversion on the selected channel

while(ADCSR.6==1); //wait for the conversion to finish

Ain = ADCH; //get the sample

pos[j][i]=Ain;

end

task4(); //delay 1 second

end

printf("End calibrating...\n\r");
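The averaging step that follows the sampling loop (discard the first sample, then average the rest per finger) can be sketched as below. This is a host-side illustration: the function name `average_samples` and its parameters are hypothetical, and integer division is assumed.

```c
#include <assert.h>

#define NUM_FINGERS 5

/* Averages samples[discard .. n-1] for each finger and writes the result
 * to avg. With n = 6 and discard = 1 this averages the last five samples. */
void average_samples(int samples[][NUM_FINGERS], int n, int discard,
                     int avg[NUM_FINGERS])
{
    assert(discard >= 0 && n > discard);  /* at least one sample must remain */
    for (int f = 0; f < NUM_FINGERS; f++) {
        long sum = 0;
        for (int j = discard; j < n; j++) {
            sum += samples[j][f];
        }
        avg[f] = (int)(sum / (n - discard));
    }
}
```

Discarding the first sample keeps a reading taken while the hand is still settling from skewing the stored value.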

Re-TEACH. If the user needs to modify the hand position for a certain letter, he can overwrite it by re-recording the hand position and the associated letter. However, once he has finished Teach mode and programmed the chip, he cannot re-teach the ASL to the MCU, because the data is stored in EEPROM. The data can then only be erased by reprogramming the chip, which prevents unintentional data corruption.

Parts List:

| Item | Price |
| --- | --- |
| Mega 32 | $8.00 |
| Last year's board | $2.00 |
| Glove | $2.00 |
| Accelerometer | $0.00 |
| LCD | $0.00 |
| MAX233ACPP RS232 driver + RS232 connector | $8.00 |
| Opamps | $6.55 |
| Breadboard | $12.00 |
| 9 V Battery | $4.00 |
| Flex Sensors | $0.00 |
| Total | $42.55 |
